Why Quantify

Let Facts Speak

Data on collaboration outcomes and delivery quality lets us surface problems from a more objective angle. When evaluating complex problems, avoid subjective judgments such as “I think” or “I feel.”

Diverse Roles, Complex Collaboration

An iteration usually involves many roles. Their actions and outputs all influence “iteration quality,” and those influences interact in complex ways. That’s why we collect objective facts along multiple dimensions and evaluate iteration quality as a whole.

Iteration Should Also Iterate

Product optimization and iteration are continuous, and a company or product has very different quality requirements at different stages. The well-known project management triangle describes a trade-off among time, cost, and scope, with quality affected by how that trade-off is made. As the company and product evolve, we need to keep collecting data from multiple angles and analyzing iteration quality, so that we can make the right trade-offs for the current context and reach our product goals.

How to Quantify

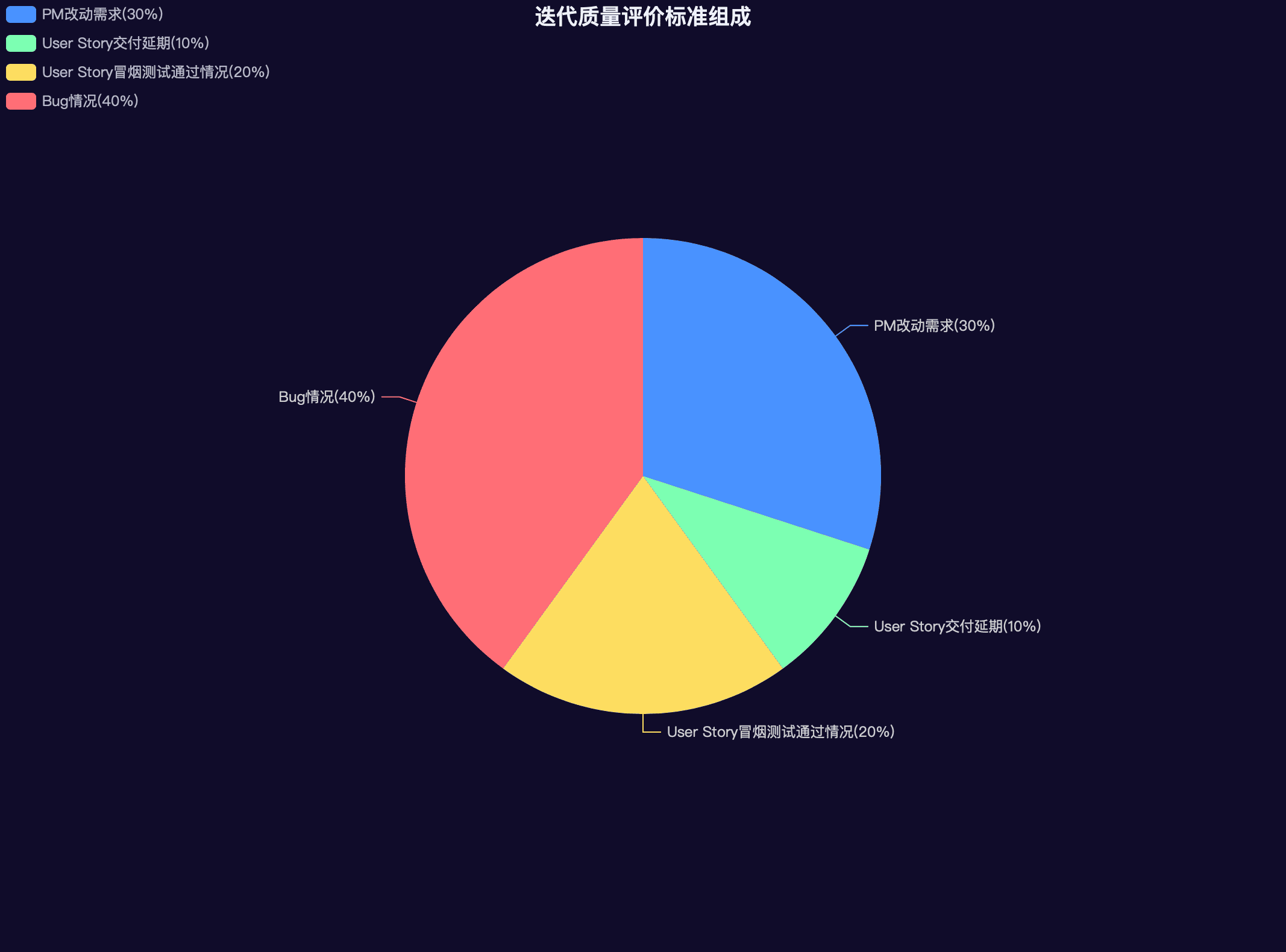

Our team evaluates iteration quality against a standard we call the “Iteration Quality Evaluation Standard.” It is composed as follows.

Components of the Iteration Quality Evaluation Standard

4 dimensions + 1 score

- 4 dimensions
  - PM requirement changes (30%)
  - User Story delivery delays (10%)
  - User Story smoke test pass rate (20%)
  - Bugs (40%)
- 1 score
  - Iteration quality score

Full score: 10; passing score: 6.

| Dimension | Weight | Score |
|---|---|---|
| PM requirement changes (after PRD finalization) | 30% | 3 |
| User Story delivery testing delays | 10% | 1 |
| User Story smoke test pass rate | 20% | 2 |
| Bugs (n = total bugs / total story points) | 40% | 4 |
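As a rough illustration of how the four weighted dimensions could roll up into one score, here is a minimal Python sketch. The maximum points per dimension and the passing score come from the table above. Everything else is an assumption made for illustration: the function names, the dictionary keys, and especially the idea that each dimension is expressed as a fraction (0.0 to 1.0) of its points earned. The article does not state the actual deduction rules in text.

```python
# Maximum points per dimension, taken from the table above (weight x full score of 10).
MAX_POINTS = {
    "pm_requirement_changes": 3.0,
    "delivery_delays": 1.0,
    "smoke_test_pass_rate": 2.0,
    "bugs": 4.0,
}
PASS_SCORE = 6.0


def bug_density(total_bugs: int, total_story_points: float) -> float:
    """n = total bugs / total story points, as defined in the table."""
    if total_story_points <= 0:
        raise ValueError("total_story_points must be positive")
    return total_bugs / total_story_points


def iteration_quality_score(earned_fraction: dict[str, float]) -> float:
    """Sum each dimension's maximum points times the fraction earned.

    Mapping a raw measurement (for example a bug density) to a fraction
    is an assumption left to the caller; it is not defined here.
    """
    if set(earned_fraction) != set(MAX_POINTS):
        raise ValueError("expected exactly the four dimensions")
    total = 0.0
    for name, max_points in MAX_POINTS.items():
        fraction = earned_fraction[name]
        if not 0.0 <= fraction <= 1.0:
            raise ValueError(f"{name}: fraction must be between 0 and 1")
        total += max_points * fraction
    return round(total, 2)


# Hypothetical iteration, values invented for illustration only.
example = {
    "pm_requirement_changes": 1.0,
    "delivery_delays": 0.5,
    "smoke_test_pass_rate": 0.75,
    "bugs": 0.5,
}
score = iteration_quality_score(example)
print(score, "pass" if score >= PASS_SCORE else "fail")  # prints: 7.0 pass
```

Keeping the weights in one table-like dictionary means a team could rebalance the dimensions later without touching the scoring logic.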

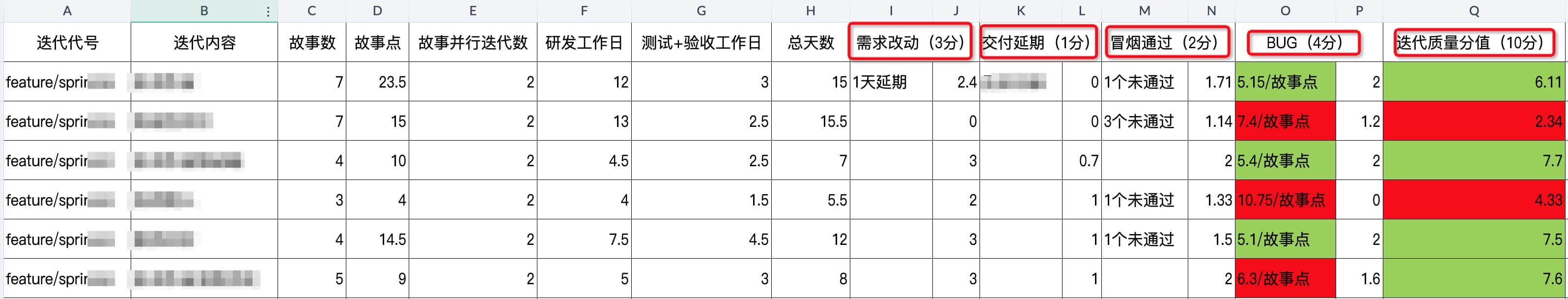

Example

Above is a recent set of iteration data from my team. Context:

- Story points: 1 story point = 1 person-day

- Iteration flow: develop & test by story → overall regression testing → PM & UI acceptance → release → retrospective

What Datafication Brings to the Team

- The team is accountable for the iteration
  - By presenting iteration quality with data, the team can review problems rationally and find solutions. The shared goal is: make the iteration better.
- Make “problems” transparent
  - Provide a solid data foundation to improve team iteration effectiveness (any change can be reflected in data)

Things We Want to Do but Haven’t Yet

By integrating the agile tools we already use and automating iteration data collection, we could generate the “iteration quality score” automatically and compare historical data dimension by dimension, making the evaluation itself more efficient.
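The historical comparison could look something like the following minimal Python sketch. It assumes the per-dimension scores of each iteration have already been collected somehow, and it does not model the tool integration at all. The function names, dictionary keys, and sample numbers are hypothetical and do not come from the team's real data.

```python
def dimension_deltas(previous: dict[str, float], latest: dict[str, float]) -> dict[str, float]:
    """Per-dimension score change between two iterations (latest minus previous)."""
    return {name: round(latest[name] - previous[name], 2) for name in previous}


def regressions(previous: dict[str, float], latest: dict[str, float]) -> list[str]:
    """Dimensions whose score dropped, largest drop first."""
    deltas = dimension_deltas(previous, latest)
    dropped = [name for name, delta in deltas.items() if delta < 0]
    return sorted(dropped, key=lambda name: deltas[name])


# Invented scores for two consecutive iterations (points earned per dimension).
previous_iteration = {
    "pm_requirement_changes": 3.0,
    "delivery_delays": 1.0,
    "smoke_test_pass_rate": 1.5,
    "bugs": 2.0,
}
latest_iteration = {
    "pm_requirement_changes": 2.0,
    "delivery_delays": 1.0,
    "smoke_test_pass_rate": 2.0,
    "bugs": 1.5,
}

print(regressions(previous_iteration, latest_iteration))
# prints: ['pm_requirement_changes', 'bugs']
```

A list like this could give a retrospective a starting point: the dimensions that got worse since the last iteration, ordered by how much they dropped.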